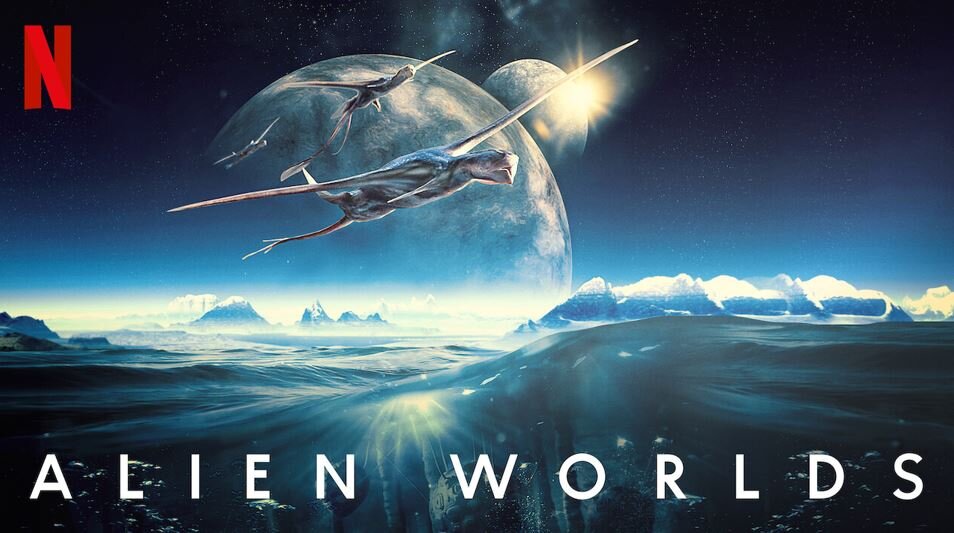

I recently watched Alien Worlds, and something stuck with me (there is more to that story, but ask me about it later). It made me think about flying in a way I never had before. A flying bird is actually manipulating matter in its gaseous form. It’s essentially the same thing as me feeling the ground under my feet, probably just nicer and smoother. While I don’t actively pay attention to the existence of air, for other species like birds, air is the main reality they live in. Air to them is something “tangible”.

As a very logical connection, this made me think about multimodality in machine intelligence.

I’ve always been kind of skeptical of the strong embodiment arguments, like Dreyfus claiming that human intelligence isn’t about rules or data, but about being and doing and living in the world. I agree that it is impossible to split humans’ cognitive processes from our hardware, our bodies, but that doesn’t mean the cognitive pathways we build can’t be founded in non-biological systems. In fact, it doesn’t even have to be the same pathway: if it leads from the same input to the same output, maybe you could say the two pathways are equivalent in many respects. I guess my take on Dreyfus is that I disagree with his “either you’re fully embodied or you’re not intelligent” approach. Embodiment isn’t the whole story, but I can see how it unlocks a layer of experience that you can’t really get any other way. This is why we convert different modalities of data into forms that machines can read: you turn images into pixels, sound frequencies into binary bits, a nice stroll into thousands of rows of coordinates… So multimodality matters. What about the birds flying in the air though?

One of the most interesting readings of this semester was on embedding homeostasis in soft robotics. The paper argues that in a world that changes constantly, intelligent agents should have their own meta-goal of self-preservation, like living organisms do. Not feelings in the human sense, but internal states they need to regulate, and maybe through those they’d also have a way to care about their behavior. We went down the rabbit hole of whether this is any different from instrumental convergence, and whether it is simply a bad idea, especially if we cannot align AI. But I agree that if we assume a certain high-level value alignment is in place, robots trying to survive could unlock different reward mechanisms beyond what we hardcode into models. What feels uncanny (and actually, also fascinating) to me here is not the alignment problem but the level of sensory sensitivity the robots could have. We find the weather too hot, too cold, or comfortable. Yes, we can look up the temperature, but the number itself isn’t data we can process. We can only match a certain temperature to a certain sensation of cold in our memory. What if you could know exactly what 13 Celsius feels like? How it settles on your skin, how your muscles adjust? What if it actually tastes a certain way, like 13 Celsius weather is more salty, while 25 Celsius is maybe more rubber-like? What if there is a reason that you like the sea view more than the mountain view, because a certain color mix appeals to your DNA combination more than others, and that’s why you like that nobody-knows-the-name mountain more than the ones in the national parks? I feel like the actual breakthrough in robotics will only come when all human senses, and senses beyond ours, can become data. Just as machine intelligence tells us about human intelligence, maybe feeling robots could reveal far more about our environment than we have ever experienced.
